Quick Start

In this quick start guide, we show how to build a RAG application with LlamaCloud. We'll set up an index via the no-code UI and integrate its retrieval endpoint in a Colab notebook.

Prerequisites

- Sign up for a LlamaCloud account.

- Prepare an API key for your preferred embedding model service (e.g. OpenAI).

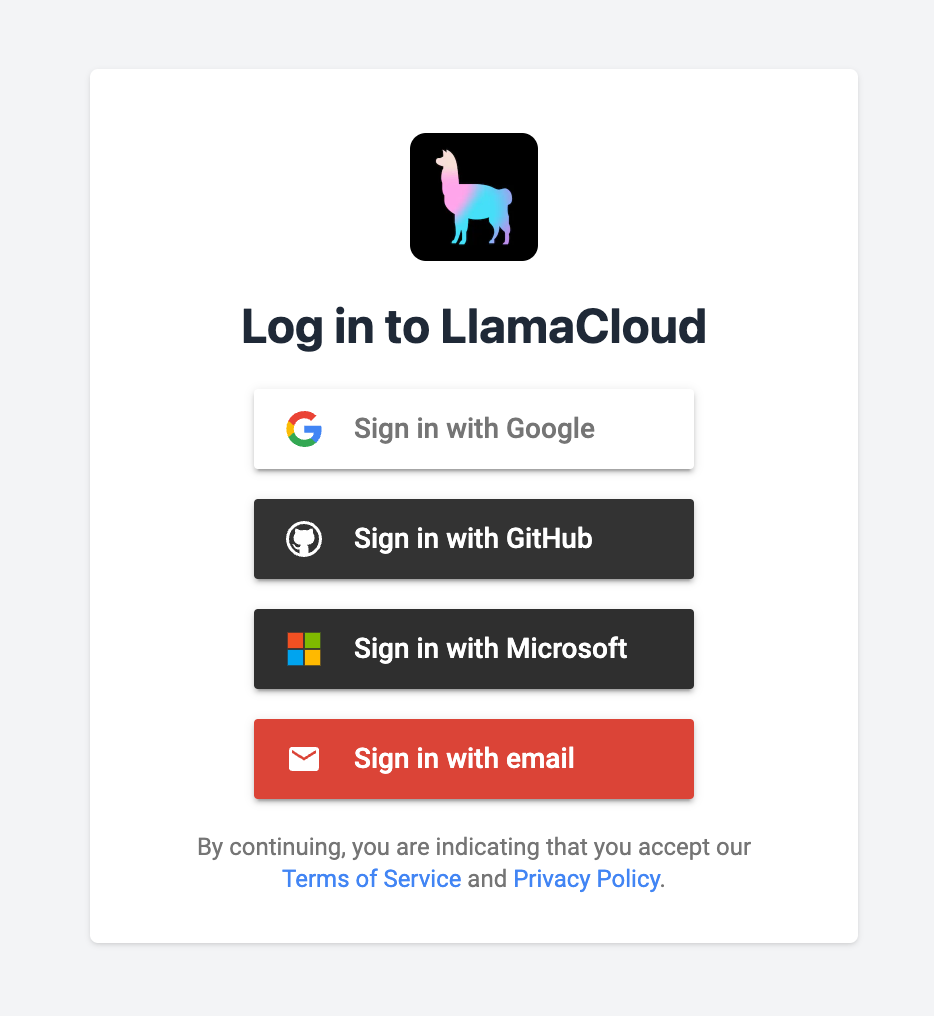

Sign in

Sign in at https://cloud.llamaindex.ai/

You should see options to sign in with Google, GitHub, Microsoft, or email.

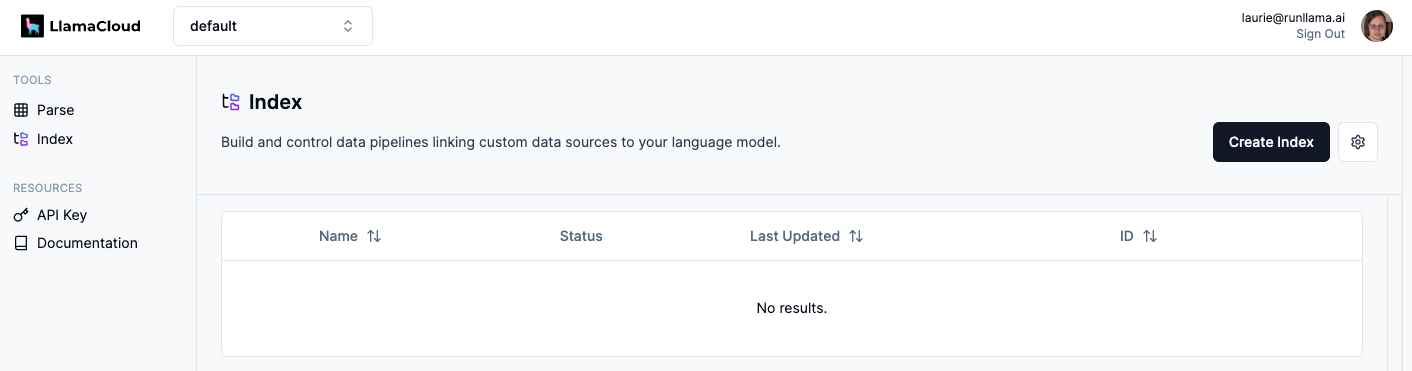

Set up an index via UI

Navigate to the Index feature via the left navbar.

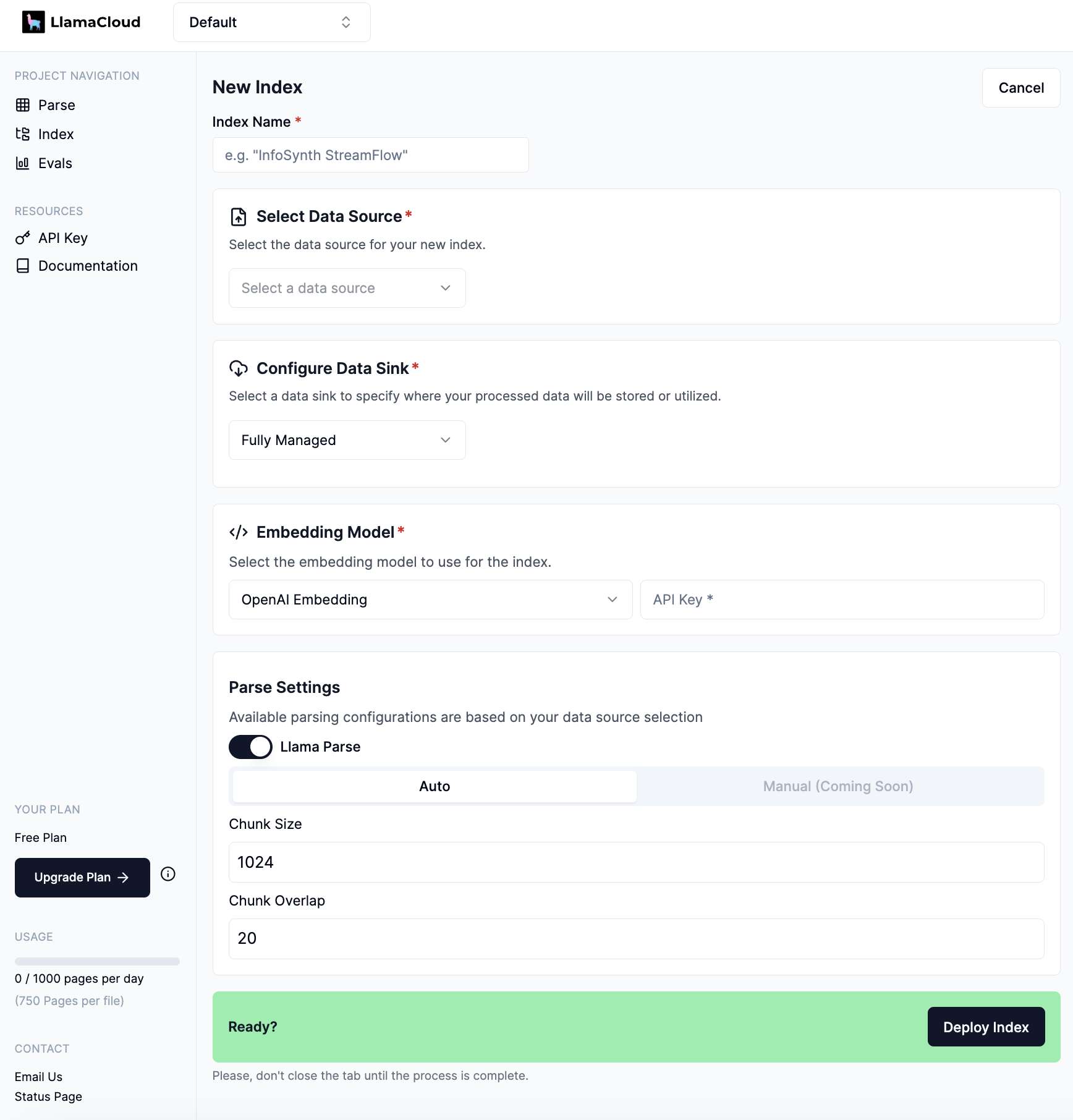

Click the Create Index button. You should see an index configuration form.

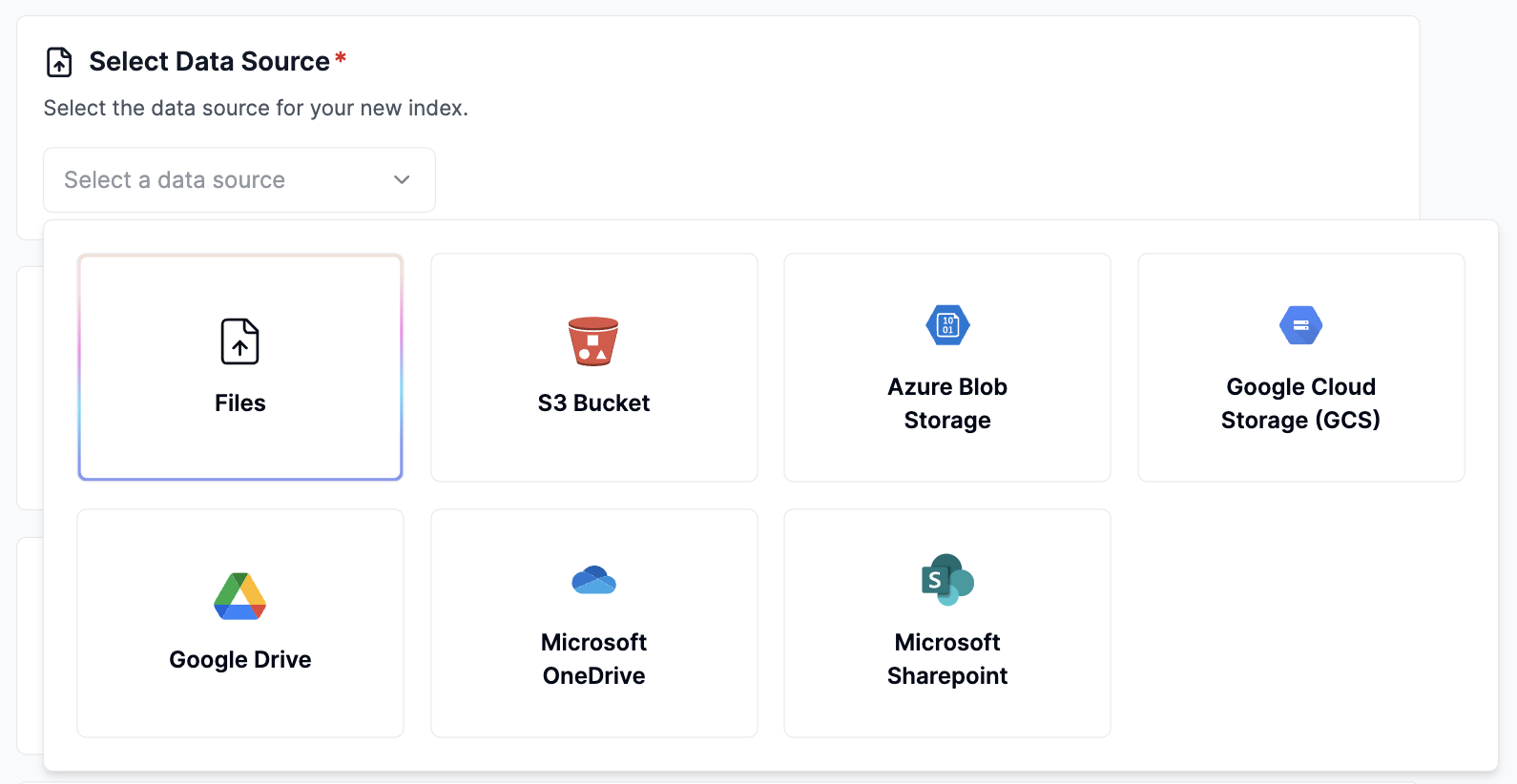

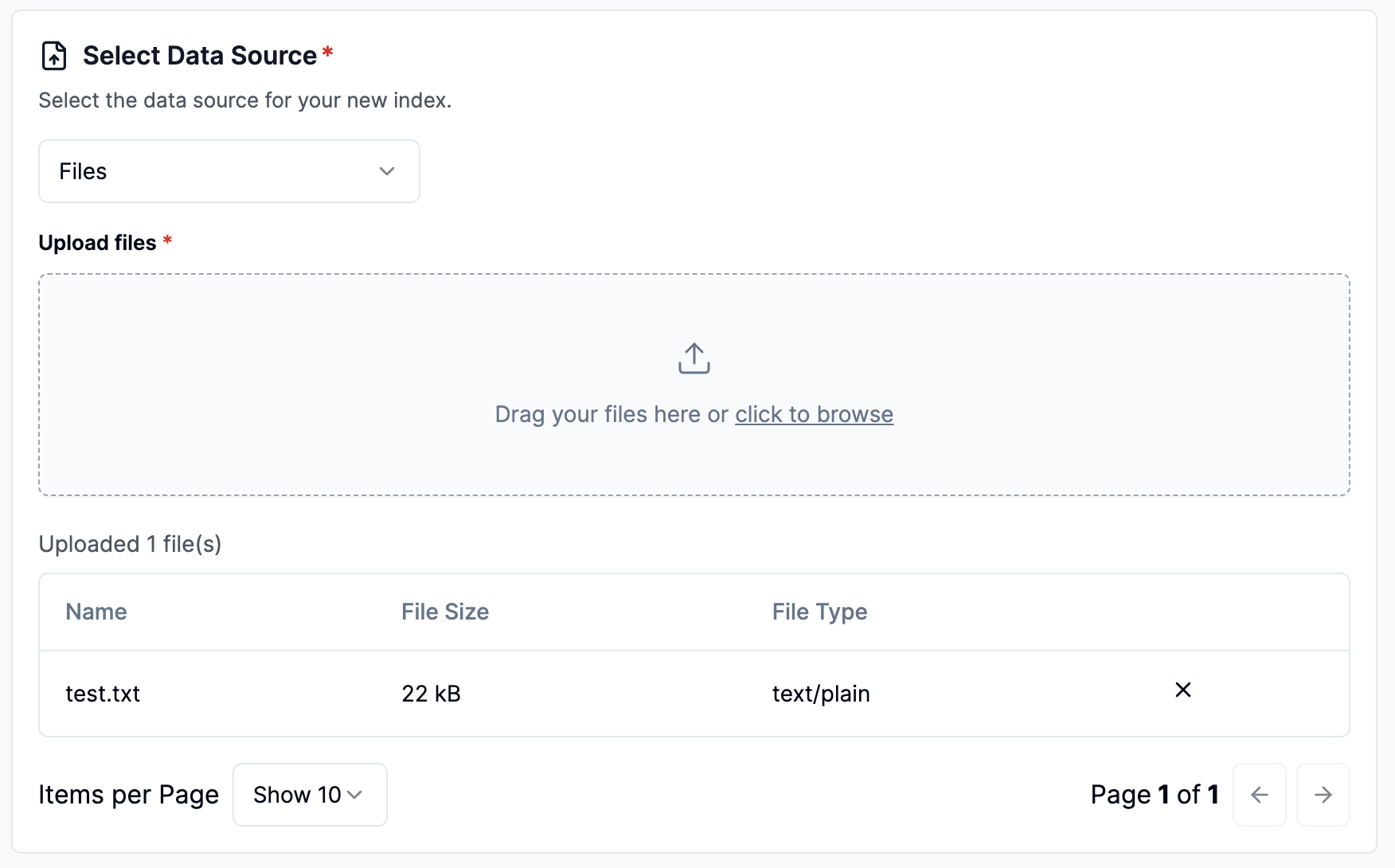

Configure data source - file upload

Click the Select a data source dropdown and select Files.

Drag files into the upload area (file pond) or click to browse.

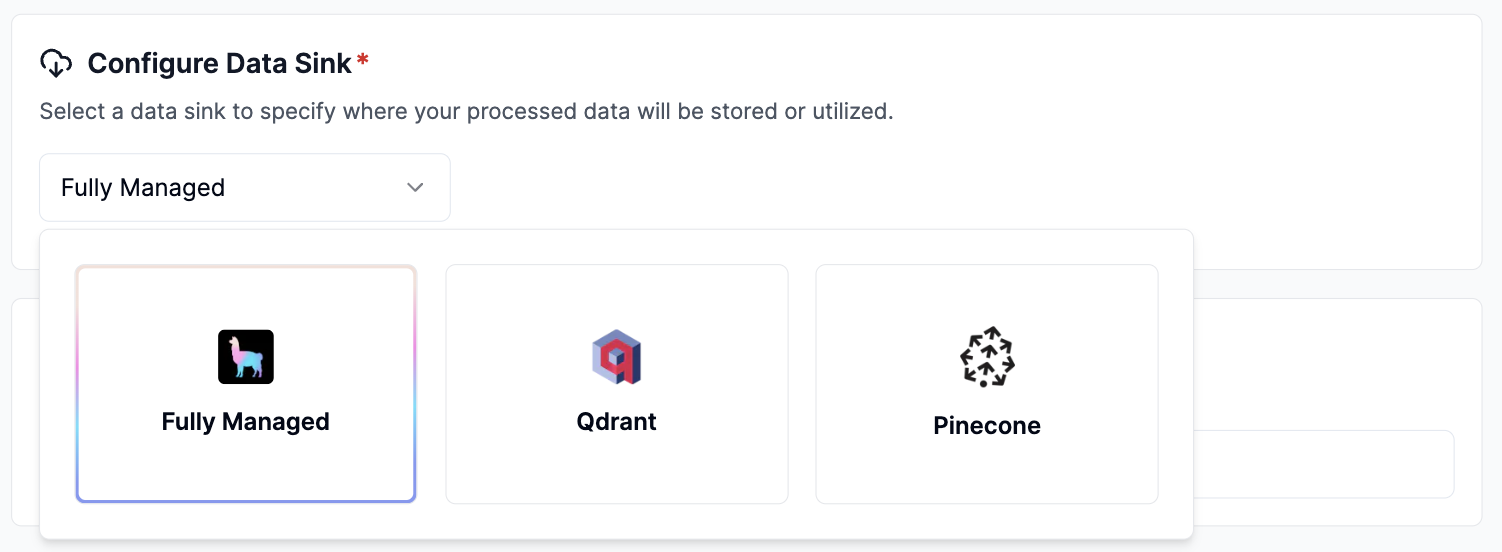

Configure data sink - managed

Select the Fully Managed data sink.

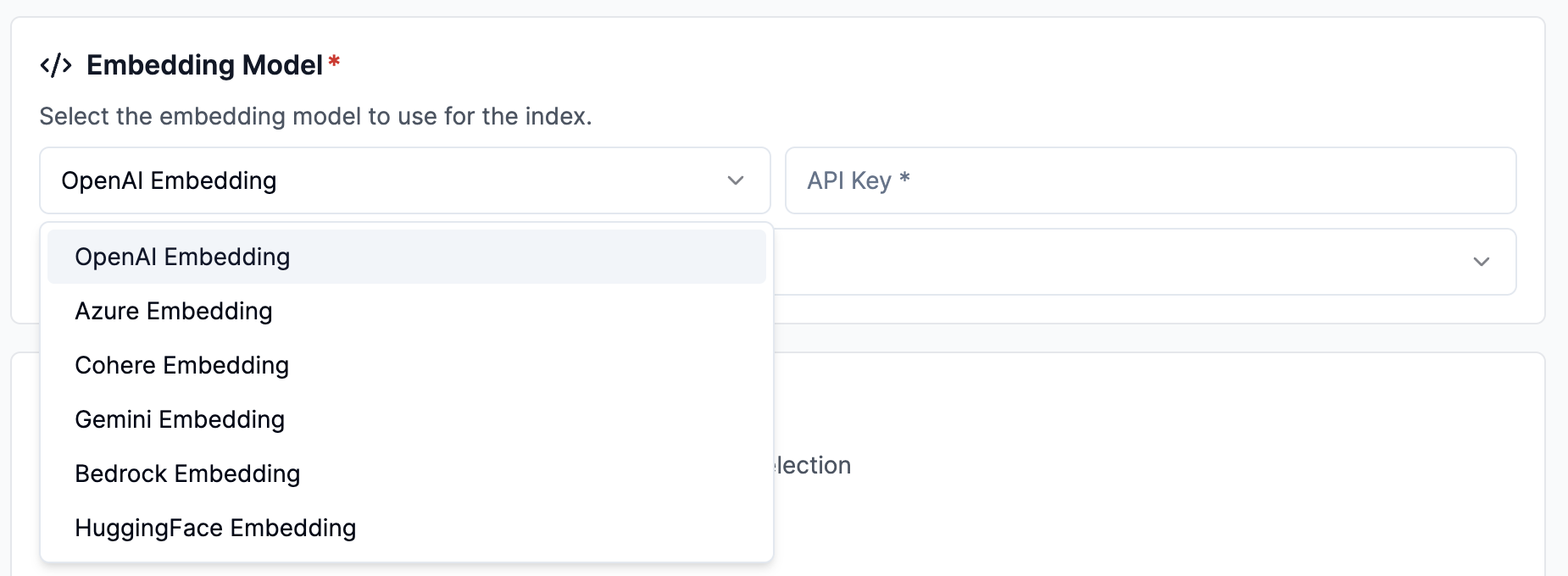

Configure embedding model - OpenAI

Select OpenAI Embedding and enter your OpenAI API key.

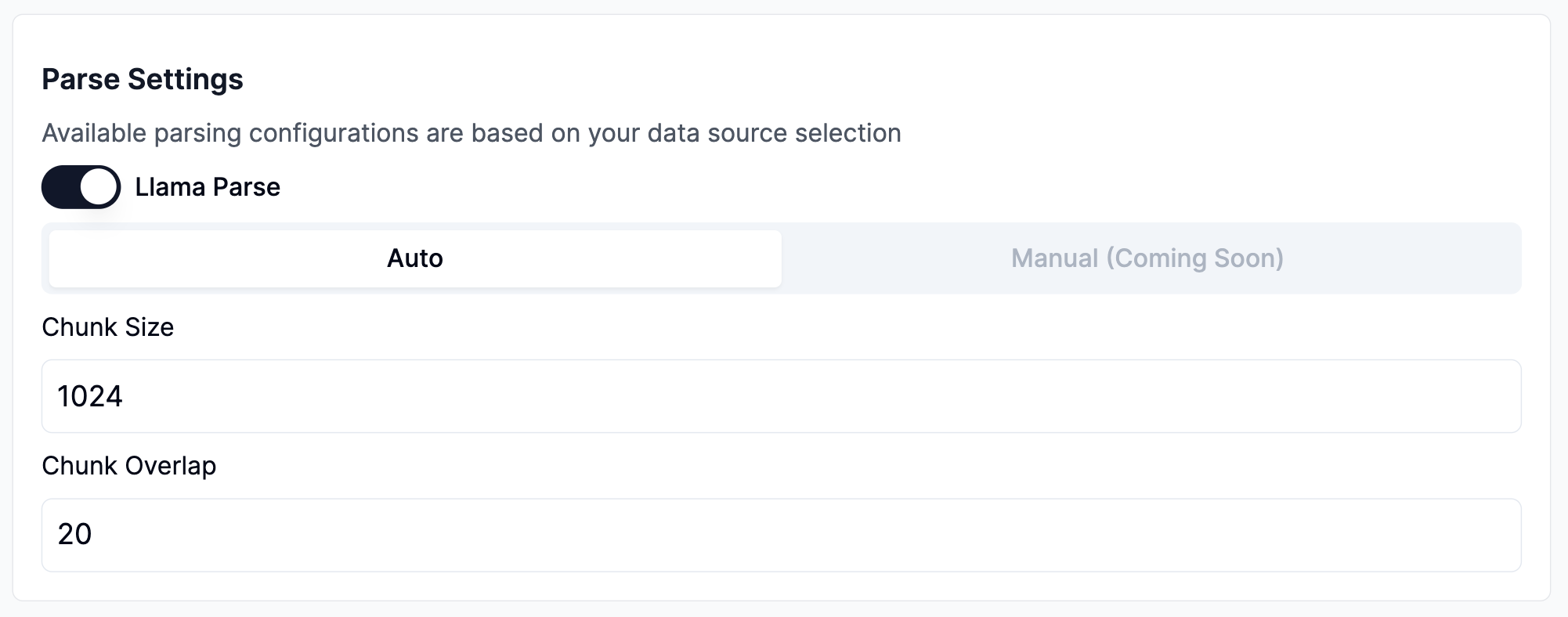

Configure parsing & transformation settings

Use the toggle to enable or disable Llama Parse.

Select Auto mode for the recommended default transformation settings (specify the desired chunk size and chunk overlap as needed).

Manual mode, with additional customization options, is coming soon.

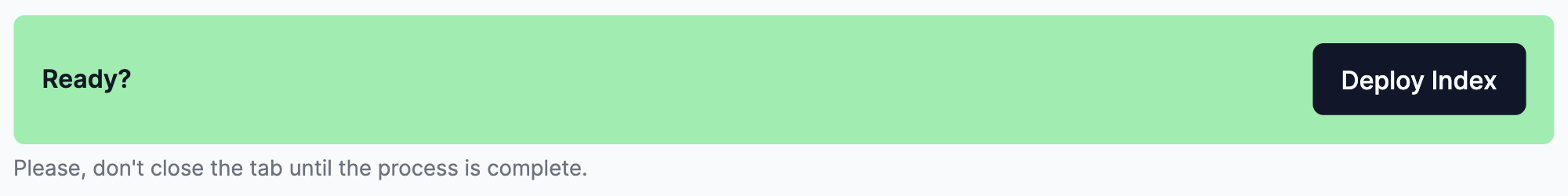
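To make chunk size and chunk overlap concrete, here is a minimal sketch of fixed-size chunking with overlap. It is an illustration only, not LlamaCloud's splitter: it measures size in whitespace-separated words, whereas the splitter LlamaCloud actually uses may measure size differently (e.g. in tokens). The function name `chunk_words` is hypothetical.

```python
def chunk_words(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Split text into chunks of at most chunk_size words (hypothetical, word-based helper).

    Consecutive chunks share chunk_overlap words, so content that straddles a
    chunk boundary appears in both neighboring chunks.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must satisfy 0 <= chunk_overlap < chunk_size")
    words = text.split()
    step = chunk_size - chunk_overlap  # how far each new chunk advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):  # this chunk already reaches the end
            break
    return chunks


print(chunk_words("a b c d e f g h i j", chunk_size=4, chunk_overlap=1))
# ['a b c d', 'd e f g', 'g h i j']
```

A larger overlap keeps more context across chunk boundaries, at the cost of more (partly redundant) chunks to embed and store.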

After configuring the ingestion pipeline, click Deploy Index to kick off ingestion.

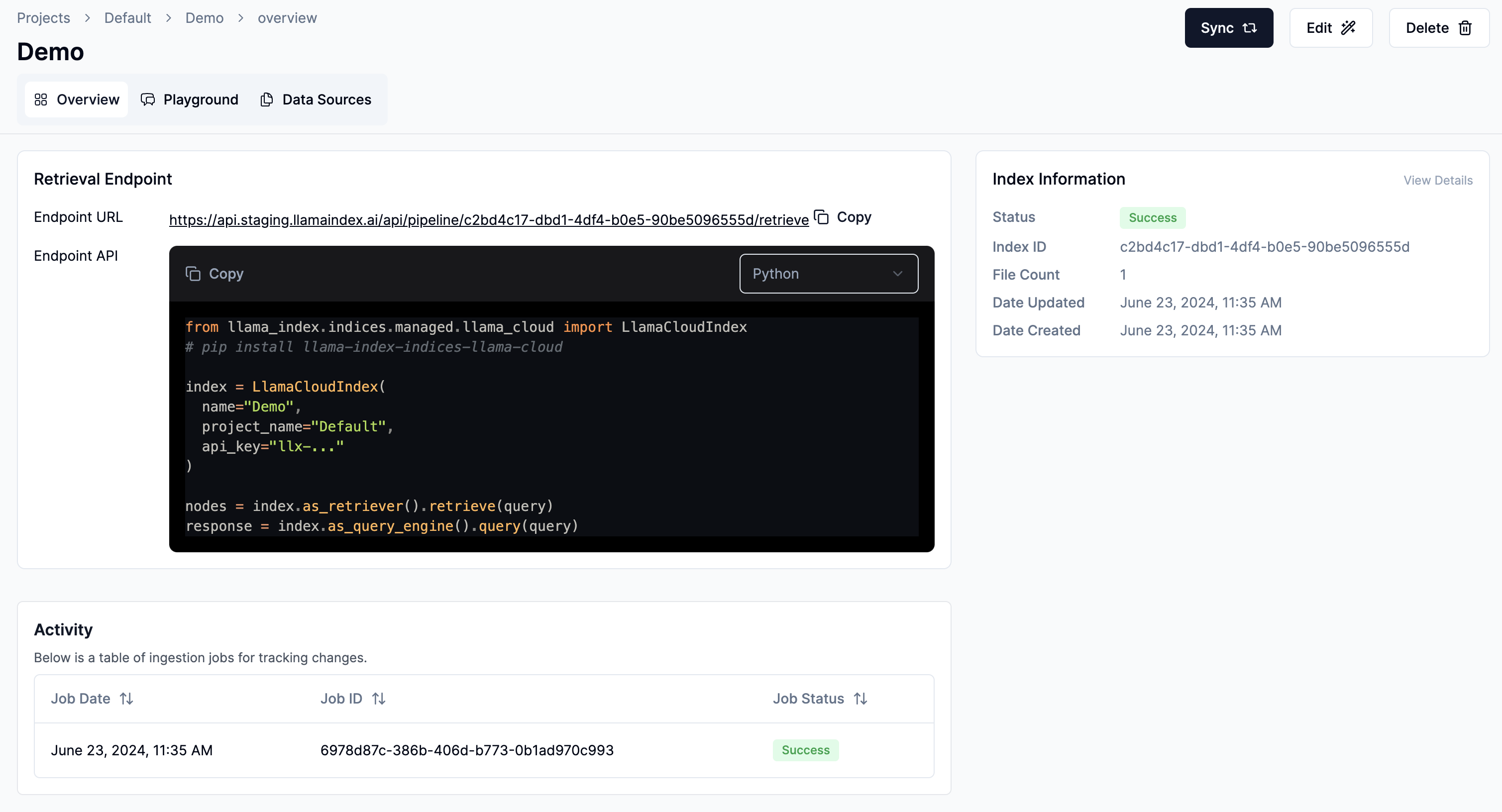

(Optional) Observe and manage your index via UI

You should see an index overview with the latest ingestion status.

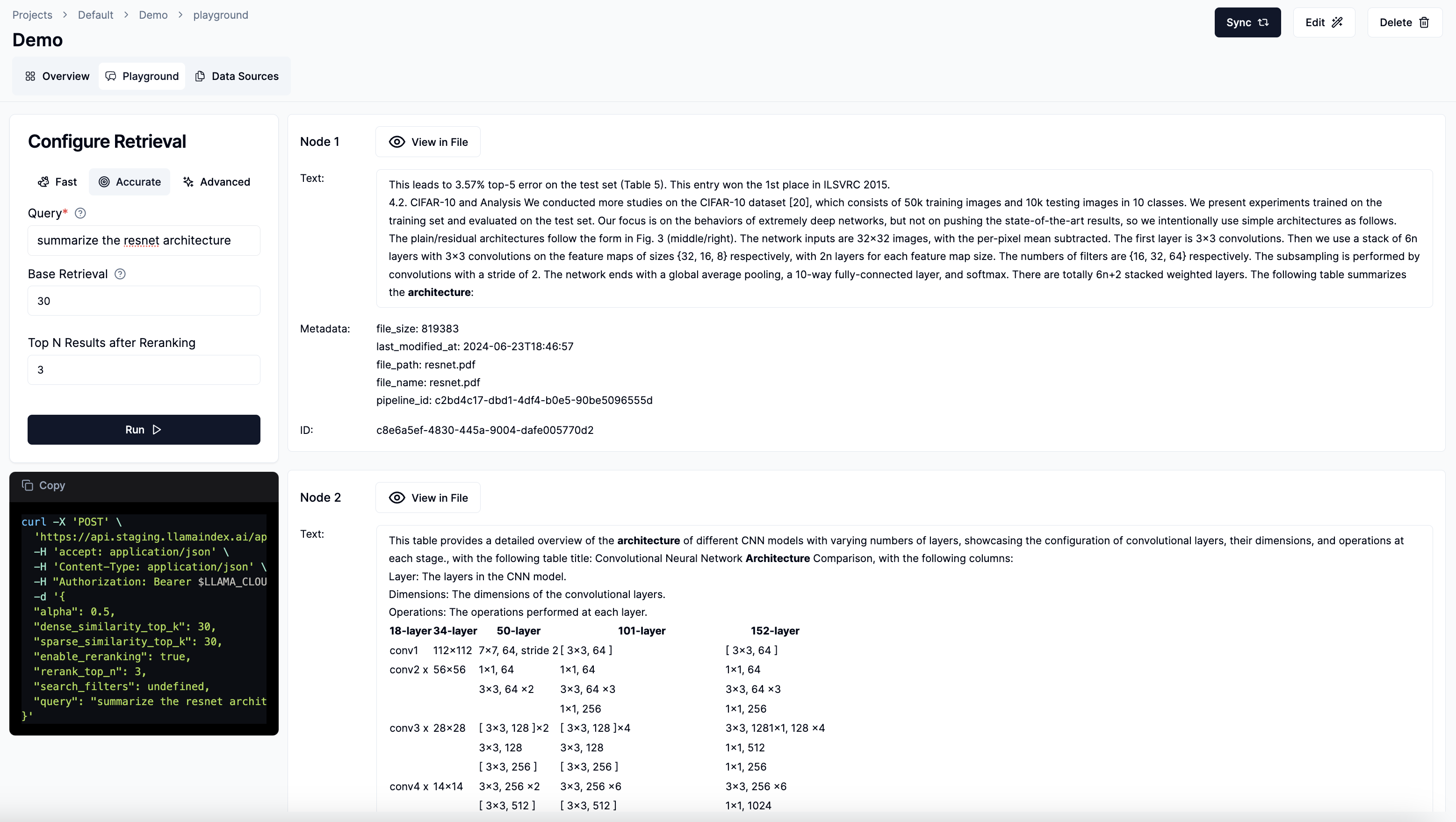

(Optional) Test retrieval via the Playground

Navigate to the Playground tab to test your retrieval endpoint.

Choose among the Fast, Accurate, and Advanced retrieval modes.

Enter a test query and specify retrieval settings (e.g. the number of chunks fetched by base retrieval and the top n kept after re-ranking).

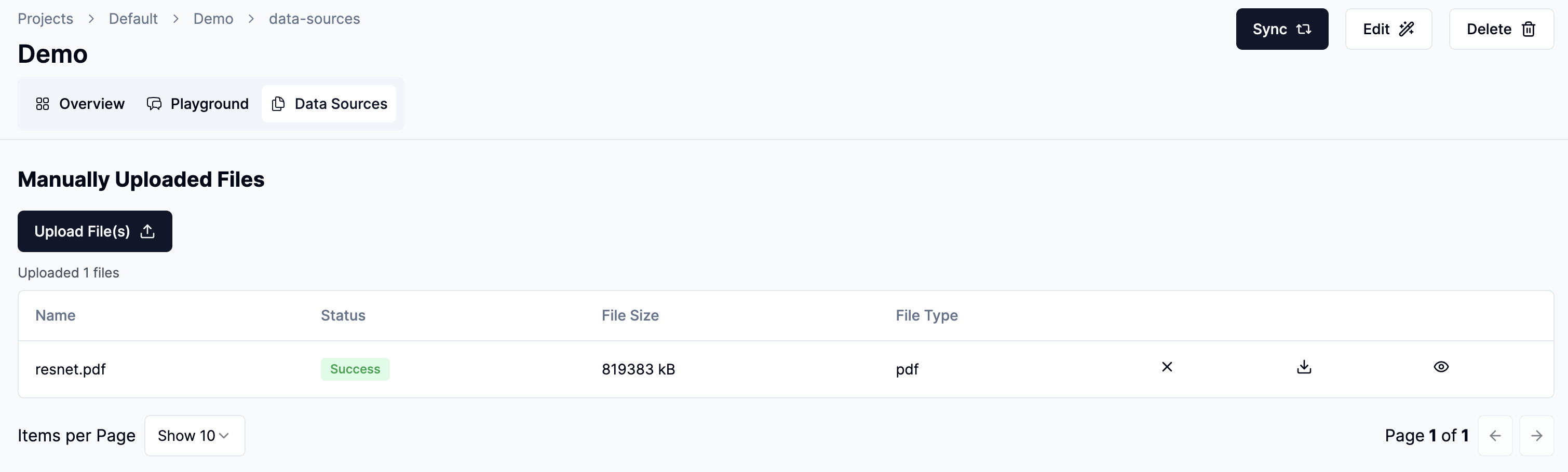
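The base retrieval and re-ranking settings describe a two-stage pattern: a fast first pass fetches a candidate set, then a re-ranker rescores only those candidates and keeps the top n. The toy sketch below shows just that control flow under stated assumptions; it is not how LlamaCloud scores results. A bag-of-words cosine similarity stands in for embedding similarity, `toy_reranker` stands in for a re-ranking model, and all function names are hypothetical.

```python
import math
from collections import Counter
from typing import Callable


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def base_retrieve(query: str, chunks: list[str], top_k: int) -> list[str]:
    """Stage 1: cheap similarity search over all chunks, keep the top_k candidates."""
    q = Counter(query.lower().split())
    scored = [(cosine(q, Counter(c.lower().split())), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored[:top_k]]


def toy_reranker(query: str, chunk: str) -> float:
    """Stand-in for a re-ranking model: an exact phrase match beats scattered word matches."""
    q, c = query.lower(), chunk.lower()
    q_words = q.split()
    if not q_words:
        return 0.0
    if q in c:
        return 2.0
    return sum(w in c.split() for w in q_words) / len(q_words)


def rerank(query: str, candidates: list[str], top_n: int,
           scorer: Callable[[str, str], float]) -> list[str]:
    """Stage 2: rescore only the stage-1 candidates and keep the top_n."""
    return sorted(candidates, key=lambda c: scorer(query, c), reverse=True)[:top_n]


chunks = [
    "our refund policy covers annual plans purchased online this year",
    "policy changes and refund requests",
    "annual plans renew automatically",
    "contact support for billing questions",
]
candidates = base_retrieve("refund policy", chunks, top_k=2)
print(candidates)  # the short chunk ranks first on cosine similarity
print(rerank("refund policy", candidates, top_n=1, scorer=toy_reranker))  # the exact-phrase chunk wins
```

The re-ranker is only run on the top k candidates, which is what makes a slower, more accurate scorer affordable in the second stage.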

(Optional) Manage connected data sources (or uploaded files)

Navigate to the Data Sources tab to manage your connected data sources.

You can upsert, delete, download, and preview uploaded files.

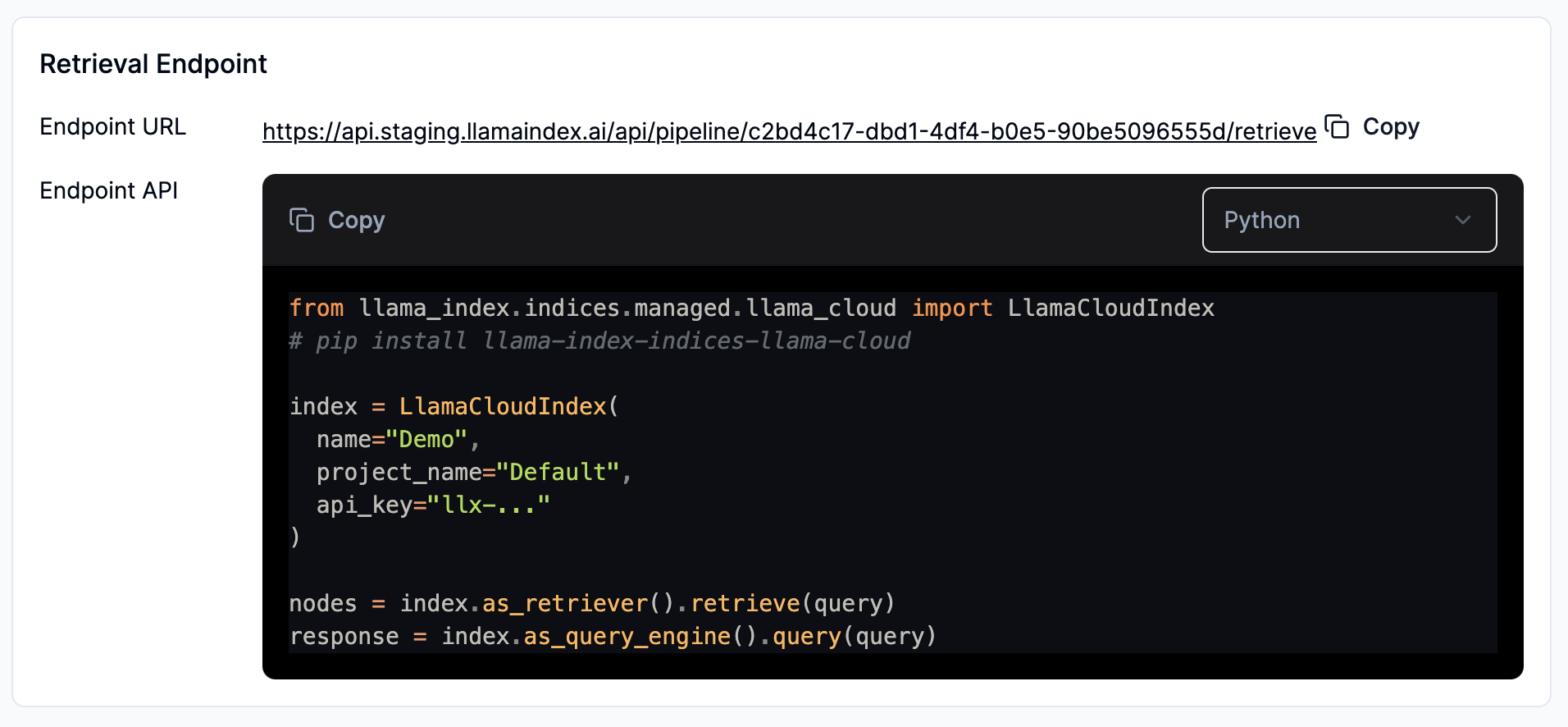

Integrate your retrieval endpoint into your RAG/agent application

After setting up the index, we can integrate its retrieval endpoint into our RAG/agent application. Here, we use a Colab notebook as an example.

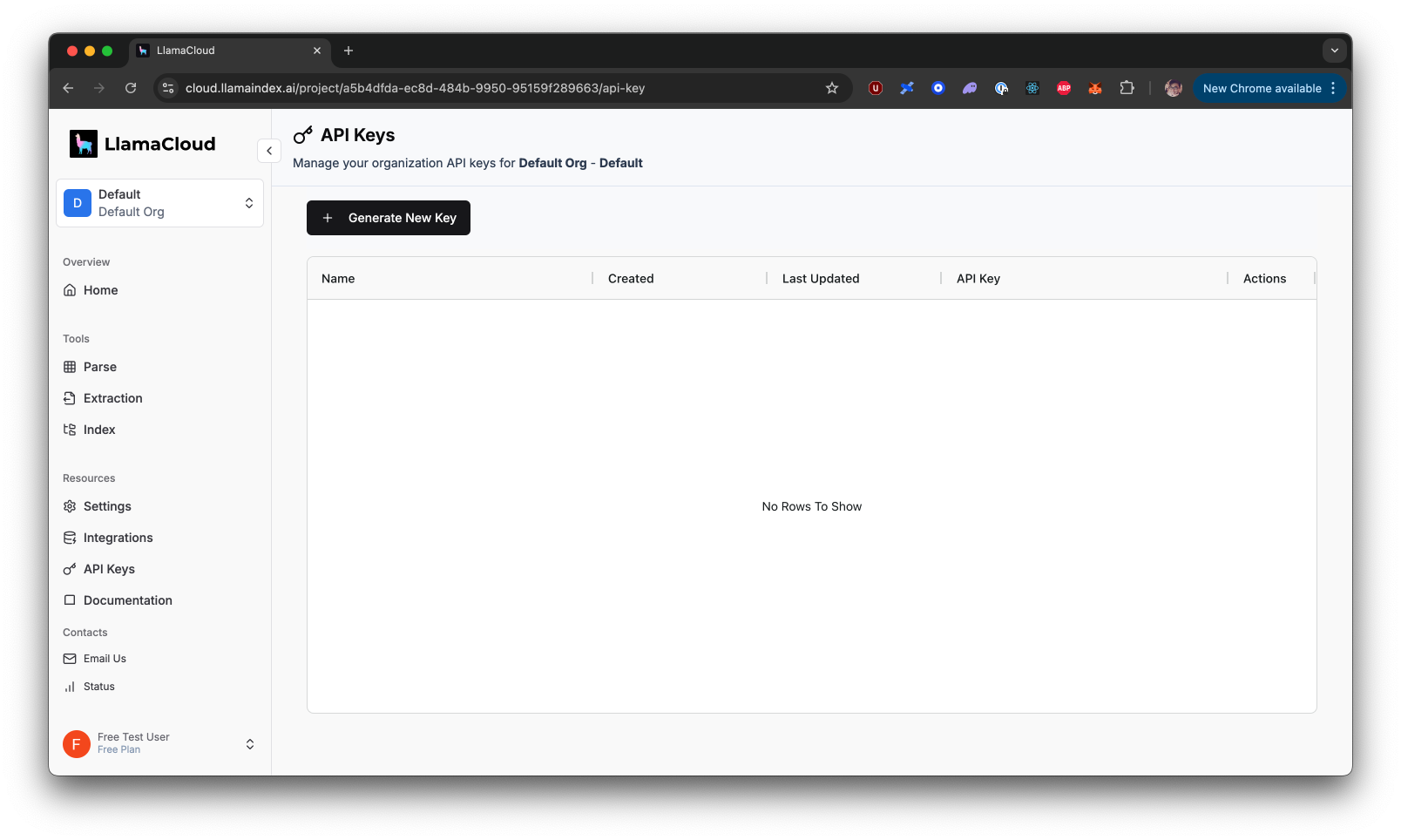

Obtain LlamaCloud API key

Navigate to the API Key page from the left sidebar and click the Generate New Key button.

Copy the API key to a safe location; you will not be able to retrieve it again.

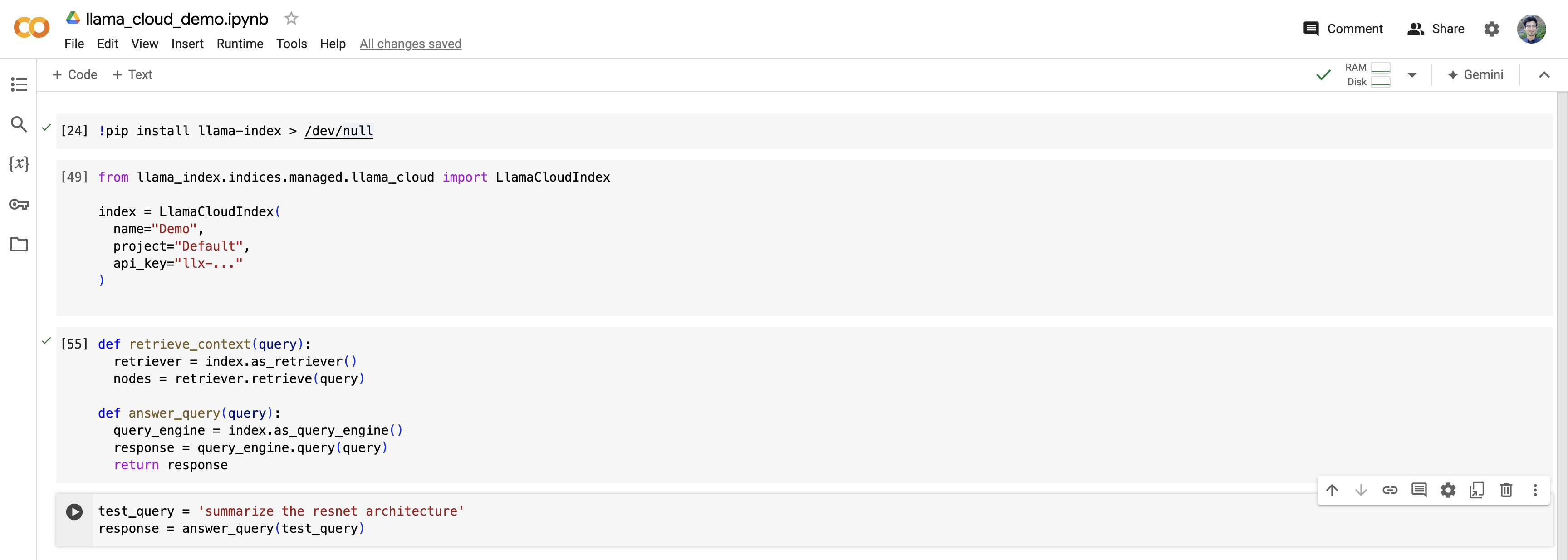
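Rather than pasting the key directly into a notebook cell, you can keep it in an environment variable. The helper below is a hypothetical sketch, not part of LlamaCloud or LlamaIndex. `OPENAI_API_KEY` is the variable the OpenAI client reads; `LLAMA_CLOUD_API_KEY` is the name we assume the LlamaIndex LlamaCloud integration reads by default, so treat it as an assumption and check your installed version.

```python
import os
from getpass import getpass
from typing import Callable


def ensure_env_key(var_name: str, prompt: Callable[[str], str] = getpass) -> str:
    """Return the secret stored in var_name, prompting for it (without echo) if unset.

    Hypothetical helper: keeping keys in environment variables avoids hard-coding
    them in notebook cells that may be shared or committed.
    """
    value = os.environ.get(var_name)
    if not value:
        value = prompt(f"Enter {var_name}: ")
        os.environ[var_name] = value  # visible for the rest of this Python process
    return value


# Example usage in a notebook cell (variable names are assumptions, see above):
# ensure_env_key("LLAMA_CLOUD_API_KEY")
# ensure_env_key("OPENAI_API_KEY")
```

Passing `prompt` as a parameter keeps the helper testable without an interactive terminal.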

Set up your RAG/agent application - Python notebook

Install the latest version of the LlamaIndex Python framework (in a Colab cell, prefix the command with `!`):

```shell
pip install llama-index
```

Navigate to the Overview tab, click the Copy button under the Retrieval Endpoint card, and paste the copied code into a notebook cell.
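For orientation, here is a rough sketch of what retrieval code for a LlamaCloud index can look like; the snippet copied from the Overview tab is the one to trust. It assumes the `LlamaCloudIndex` class from the `llama_index.indices.managed.llama_cloud` module (provided by the `llama-index-indices-managed-llama-cloud` package, if `llama-index` does not already pull it in), a project named `Default`, and a hypothetical index name `my_first_index`; substitute the names shown in your project.

```python
import os


def retrieve_from_llamacloud(query: str, index_name: str, project_name: str = "Default"):
    """Return (score, text) pairs for query from a LlamaCloud index (sketch; names are assumptions)."""
    api_key = os.environ.get("LLAMA_CLOUD_API_KEY")  # assumed variable name
    if not api_key:
        raise RuntimeError("Set LLAMA_CLOUD_API_KEY before calling this function.")
    # Imported lazily so the helper can be defined even before llama-index is installed.
    from llama_index.indices.managed.llama_cloud import LlamaCloudIndex

    index = LlamaCloudIndex(name=index_name, project_name=project_name, api_key=api_key)
    nodes = index.as_retriever().retrieve(query)
    # Each result is a NodeWithScore: a retrieved chunk plus its relevance score.
    return [(n.score, n.node.get_content()) for n in nodes]


if __name__ == "__main__" and os.environ.get("LLAMA_CLOUD_API_KEY"):
    # "my_first_index" is a placeholder: use the name you gave the index in the UI.
    for score, text in retrieve_from_llamacloud("What is this document about?", "my_first_index"):
        print(f"{score}: {text[:80]}")
```

The retrieved chunks can then be passed to an LLM of your choice, or you can let LlamaIndex do that for you through the index's query engine.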

Now you have a minimal RAG application ready to use!

You can find a demo Colab notebook here.